Published in AAAI-22 Workshop on Adversarial Machine Learning and Beyond, 2022

Julia Grabinski, Janis Keuper, Margret Keuper

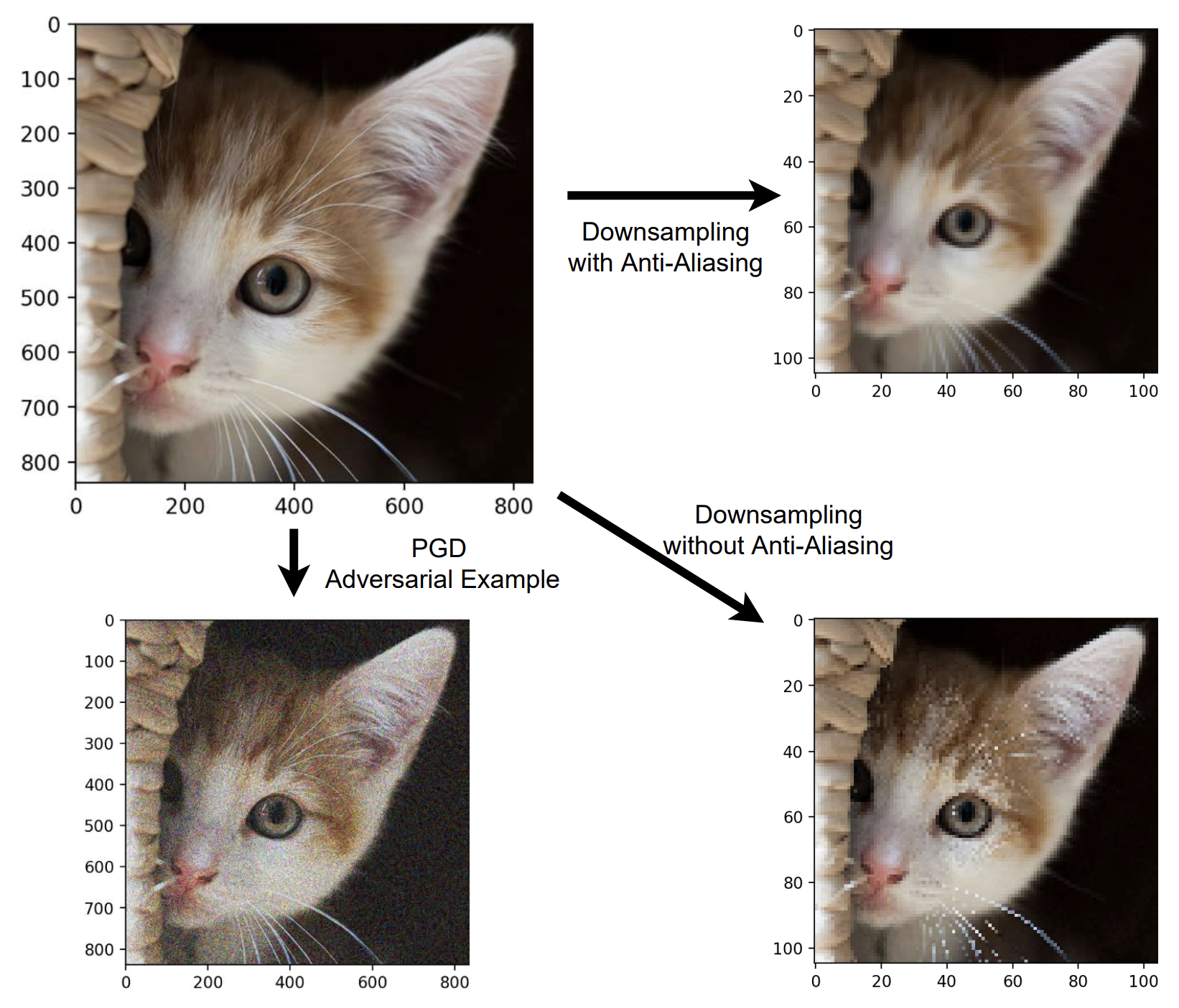

Many commonly well-performing convolutional neural network models have been shown to be susceptible to input data perturbations, indicating a low model robustness. Adversarial attacks are specifically optimized to reveal model weaknesses by generating small, barely perceivable image perturbations that flip the model prediction. Robustness against attacks can be gained, for example, by using adversarial examples during training, which effectively reduces the measurable model attackability. In contrast, research on analyzing the source of a model’s vulnerability is scarce. In this paper, we analyze adversarially trained, robust models in the context of a particularly suspicious network operation, the downsampling layer, and provide evidence that robust models have learned to downsample more accurately and suffer significantly less from aliasing than baseline models.
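To make the notion of a small perturbation that flips the prediction concrete, here is a minimal sketch of a one-step sign-gradient attack on a toy logistic model. The model, the weights, and the helper names `fgsm_linear` and `predict` are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def fgsm_linear(x, y, w, b, eps):
    """One-step sign-gradient perturbation for p = sigmoid(w.x + b).

    y is the true label in {0, 1}. For the cross-entropy loss, the
    gradient with respect to the input is (p - y) * w.
    """
    p = 1.0 / (1.0 + np.exp(-(w @ x + b)))
    grad_x = (p - y) * w
    # Move every input coordinate by eps in the direction that raises the loss.
    return x + eps * np.sign(grad_x)

def predict(x, w, b):
    """Hard decision of the toy model: class 1 if the logit is positive."""
    return int(w @ x + b > 0)

# Hypothetical toy weights and input, chosen only for illustration.
w = np.array([1.0, -2.0, 0.5])
b = 0.0
x = np.array([0.3, 0.0, 0.2])
x_adv = fgsm_linear(x, y=1, w=w, b=b, eps=0.2)

print(predict(x, w, b), predict(x_adv, w, b))
print(np.max(np.abs(x_adv - x)))
```

Each coordinate moves by at most `eps`, yet the decision of this toy model changes. Adversarial training would add inputs such as `x_adv` to the training data.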

@inproceedings{ grabinski2022aliasing, title={Aliasing coincides with {CNN}s vulnerability towards adversarial attacks}, author={Julia Grabinski and Janis Keuper and Margret Keuper}, booktitle={The AAAI-22 Workshop on Adversarial Machine Learning and Beyond}, year={2022}, url={https://openreview.net/forum?id=vKc1mLxBebP} }

Download here

Published in European Conference on Machine Learning, 2022

Julia Grabinski, Janis Keuper, Margret Keuper

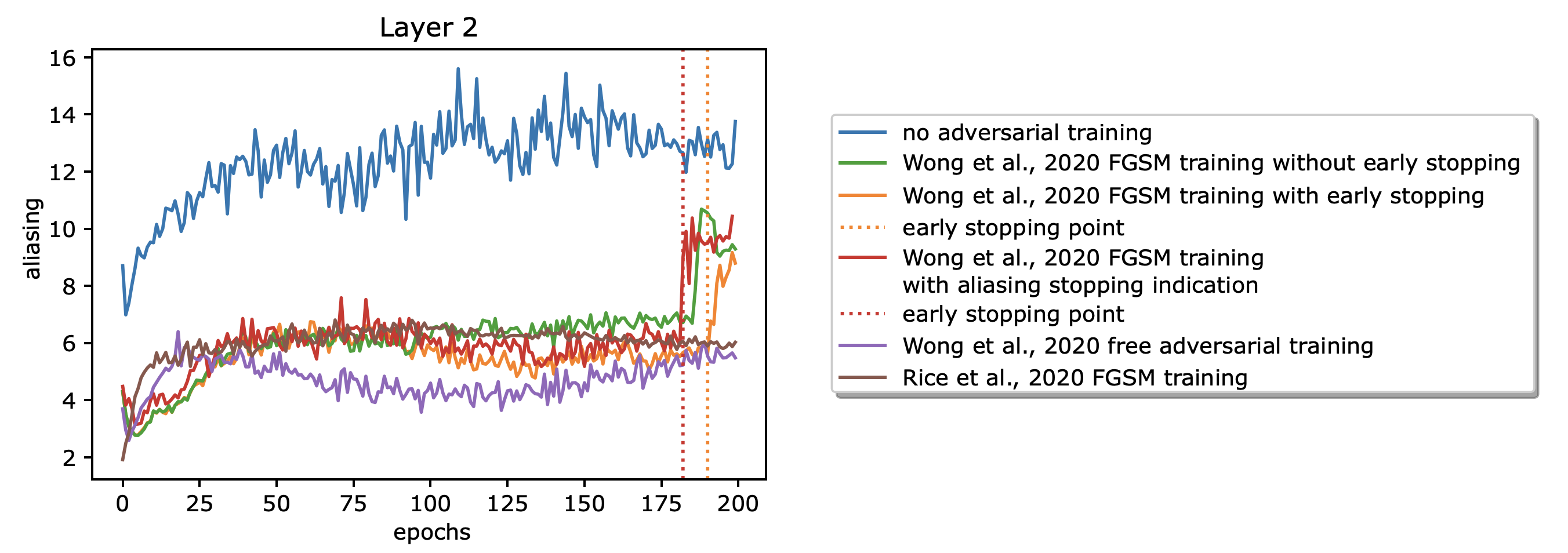

Many commonly well-performing convolutional neural network models have been shown to be susceptible to input data perturbations, indicating a low model robustness. To reveal model weaknesses, adversarial attacks are specifically optimized to generate small, barely perceivable image perturbations that flip the model prediction. Robustness against attacks can be gained by using adversarial examples during training, which in most cases reduces the measurable model attackability. Unfortunately, this technique can lead to robust overfitting, which results in non-robust models. In this paper, we analyze adversarially trained, robust models in the context of a specific network operation, the downsampling layer, and provide evidence that robust models have learned to downsample more accurately and suffer significantly less from downsampling artifacts, a.k.a. aliasing, than baseline models. In the case of robust overfitting, we observe a strong increase in aliasing and propose a novel early stopping approach based on the measurement of aliasing.
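One way to picture a measurement of aliasing is to compare plain stride-2 subsampling with subsampling after an ideal low-pass filter. The sketch below does this for a single 2D map. The function names and the mean-absolute-difference score are assumptions made for illustration and may differ from the measure defined in the paper. It assumes height and width are divisible by 4.

```python
import numpy as np

def lowpass_then_subsample(x):
    """Ideal low-pass filter in the Fourier domain, then keep every second sample."""
    h, w = x.shape
    spec = np.fft.fftshift(np.fft.fft2(x))
    # Keep only the central (low-frequency) half of the shifted spectrum.
    mask = np.zeros((h, w))
    mask[h // 4: h // 4 + h // 2, w // 4: w // 4 + w // 2] = 1.0
    filtered = np.fft.ifft2(np.fft.ifftshift(spec * mask)).real
    return filtered[::2, ::2]

def aliasing_gap(x):
    """Mean absolute difference between naive and low-pass-filtered subsampling."""
    naive = x[::2, ::2]
    clean = lowpass_then_subsample(x)
    return float(np.mean(np.abs(naive - clean)))

idx = np.arange(16)
# Band-limited map: a slow cosine along one axis.
smooth = np.cos(2 * np.pi * idx / 16)[:, None] * np.ones((1, 16))
# Highest representable frequency: a checkerboard.
checker = (-1.0) ** np.add.outer(idx, idx)

print(aliasing_gap(smooth), aliasing_gap(checker))
```

The band-limited map survives naive subsampling essentially unchanged, while the checkerboard produces a large gap. A score of this kind, tracked over training epochs, could serve as an early-stopping signal.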

@article{grabinski2022aliasing, title={Aliasing and adversarial robust generalization of cnns}, author={Grabinski, Julia and Keuper, Janis and Keuper, Margret}, journal={Machine Learning}, volume={111}, number={11}, pages={3925--3951}, year={2022}, publisher={Springer} }

Download here

Published in European Conference on Computer Vision, 2022

Julia Grabinski, Steffen Jung, Janis Keuper, Margret Keuper

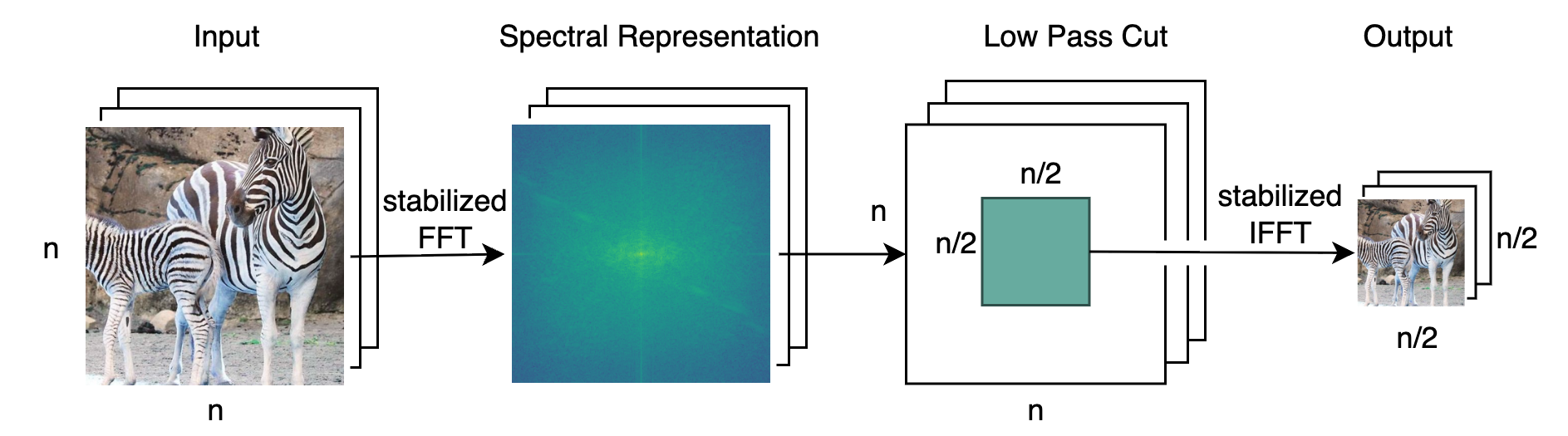

Over the last years, Convolutional Neural Networks (CNNs) have been the dominating neural architecture in a wide range of computer vision tasks. From an image and signal processing point of view, this success might be a bit surprising, as the inherent spatial pyramid design of most CNNs apparently violates basic signal processing laws, i.e. the Sampling Theorem, in their down-sampling operations. However, since poor sampling appeared not to affect model accuracy, this issue has been broadly neglected until model robustness started to receive more attention. Recent work in the context of adversarial attacks and distribution shifts showed, after all, that there is a strong correlation between the vulnerability of CNNs and aliasing artifacts induced by poor down-sampling operations. This paper builds on these findings and introduces an aliasing-free down-sampling operation which can easily be plugged into any CNN architecture: FrequencyLowCut pooling. Our experiments show that, in combination with simple and fast FGSM adversarial training, our hyper-parameter-free operator significantly improves model robustness and avoids catastrophic overfitting.
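The general idea of down-sampling without aliasing can be sketched by cutting away the high frequencies in the Fourier domain and transforming back at half resolution. The NumPy function below is an illustration of that idea only. The name `frequency_crop_pool` is hypothetical, and the code is not the authors' FrequencyLowCut implementation, which operates on batched CNN feature maps.

```python
import numpy as np

def frequency_crop_pool(x):
    """Halve the resolution of a 2D map by keeping only its low frequencies."""
    h, w = x.shape
    new_h, new_w = h // 2, w // 2
    # norm="forward" stores the mean of x in the DC coefficient,
    # so the output keeps the same value range as the input.
    spec = np.fft.fftshift(np.fft.fft2(x, norm="forward"))
    # Crop so that the DC coefficient lands at the center of the smaller spectrum.
    top = h // 2 - new_h // 2
    left = w // 2 - new_w // 2
    cropped = spec[top: top + new_h, left: left + new_w]
    return np.fft.ifft2(np.fft.ifftshift(cropped), norm="forward").real

idx = np.arange(8)
constant = np.full((8, 8), 3.0)
slow_wave = np.cos(2 * np.pi * idx / 8)[:, None] * np.ones((1, 8))
checker = (-1.0) ** np.add.outer(idx, idx)

print(frequency_crop_pool(constant).shape)
print(np.abs(frequency_crop_pool(checker)).max())
```

A band-limited input such as `slow_wave` comes out identical to plain stride-2 subsampling, whereas the checkerboard, which would alias under plain subsampling, is removed entirely. The operator has no tunable parameters, which matches the hyper-parameter-free description above.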

@inproceedings{ grabinski2022frequencylowcut, title={Frequencylowcut pooling-plug and play against catastrophic overfitting}, author={Grabinski, Julia and Jung, Steffen and Keuper, Janis and Keuper, Margret}, booktitle={European Conference on Computer Vision}, pages={36--57}, year={2022}, organization={Springer} }

Download here

Published in Advances in Neural Information Processing Systems, 2022

Julia Grabinski, Paul Gavrikov, Janis Keuper, Margret Keuper

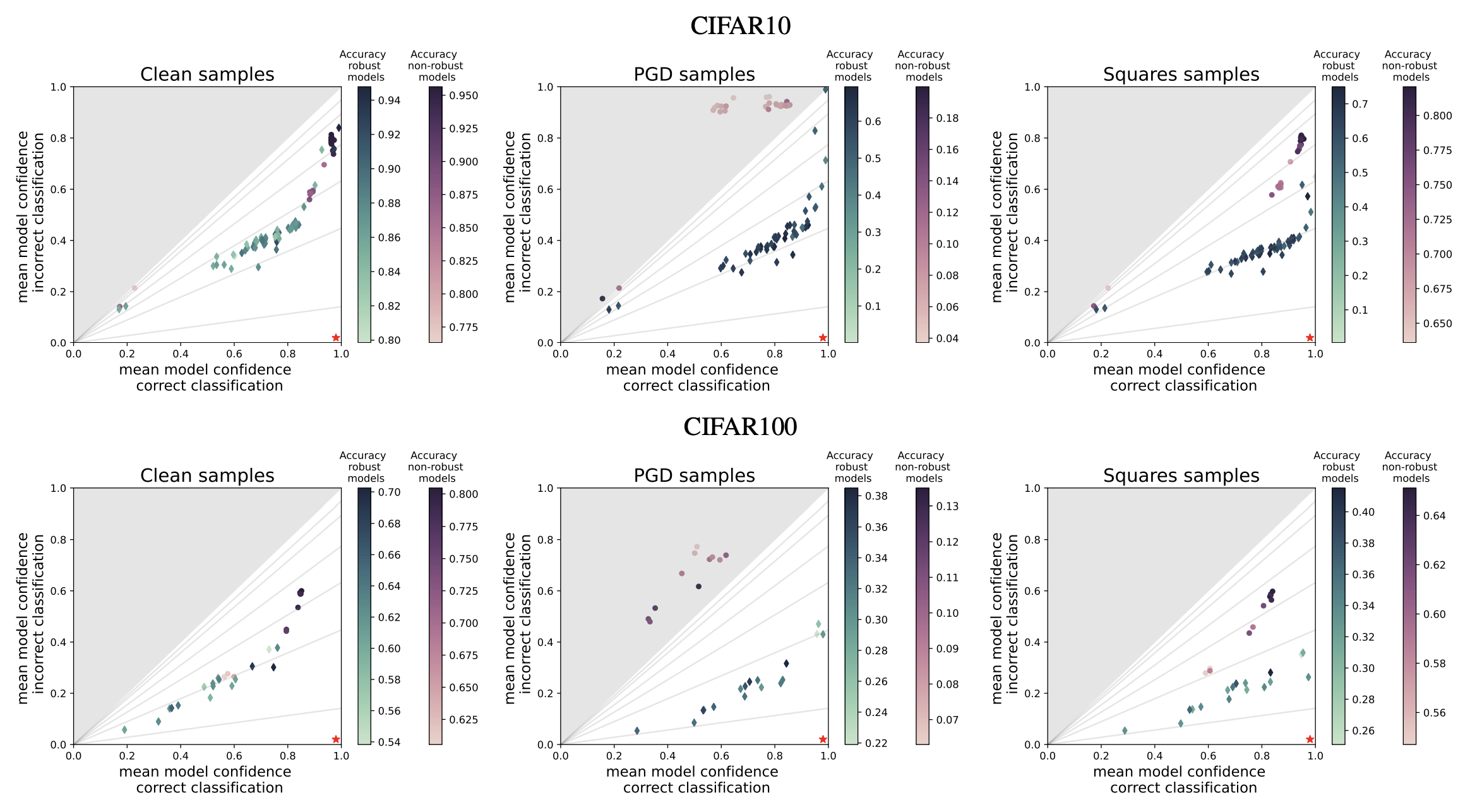

Despite the success of convolutional neural networks (CNNs) in many academic benchmarks for computer vision tasks, their application in the real world still faces fundamental challenges. One of these open problems is the inherent lack of robustness, unveiled by the striking effectiveness of adversarial attacks. Current attack methods are able to manipulate the network’s prediction by adding specific but small amounts of noise to the input. In turn, adversarial training (AT) aims to achieve robustness against such attacks and ideally a better model generalization ability by including adversarial samples in the training set. However, an in-depth analysis of the resulting robust models beyond adversarial robustness is still pending. In this paper, we empirically analyze a variety of adversarially trained models that achieve high robust accuracies when facing state-of-the-art attacks, and we show that AT has an interesting side effect: it leads to models that are significantly less overconfident in their decisions, even on clean data, than non-robust models. Further, our analysis of robust models shows that not only AT but also the model’s building blocks (like activation functions and pooling) have a strong influence on the models’ prediction confidences.
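Overconfidence can be illustrated by comparing a model's mean predicted confidence with its actual accuracy. The sketch below uses that simple gap as a proxy. The function names and the toy logits are assumptions for illustration, and the paper may rely on different or additional metrics.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax with the usual max subtraction for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def overconfidence_gap(logits, labels):
    """Mean top-class confidence minus accuracy; positive values mean overconfidence."""
    probs = softmax(logits)
    confidence = probs.max(axis=1)
    correct = probs.argmax(axis=1) == labels
    return float(confidence.mean() - correct.mean())

# Hypothetical logits for four samples and two classes.
logits = np.array([[2.0, 0.0], [2.0, 0.0], [0.0, 2.0], [2.0, 0.0]])
labels = np.array([0, 1, 1, 0])

print(overconfidence_gap(logits, labels))
print(overconfidence_gap(0.25 * logits, labels))
```

Both calls score the same predictions, so accuracy is identical. Only the sharpness of the softmax output differs, and the sharper logits yield the larger gap.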

@inproceedings{ grabinski2022robust, title={Robust Models are less Over-Confident}, author={Julia Grabinski and Paul Gavrikov and Janis Keuper and Margret Keuper}, booktitle={Advances in Neural Information Processing Systems}, editor={Alice H. Oh and Alekh Agarwal and Danielle Belgrave and Kyunghyun Cho}, year={2022}, url={https://openreview.net/forum?id=5K3uopkizS} }

Download here

Published in International Conference on Computer Vision Workshops, 2023

Shashank Agnihotri, Kanchana Vaishnavi Gandikota, Julia Grabinski, Paramanand Chandramouli, Margret Keuper

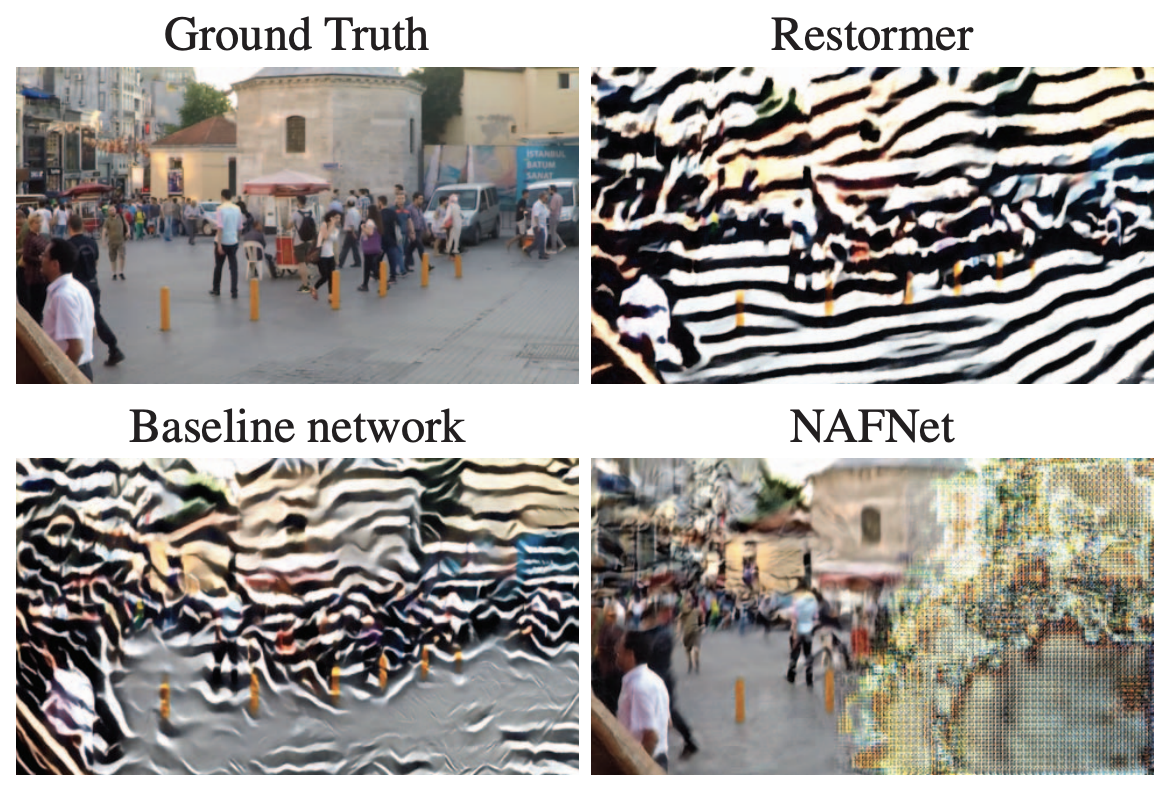

Following their success in visual recognition tasks, Vision Transformers (ViTs) are being increasingly employed for image restoration. As a few recent works claim that ViTs for image classification also have better robustness properties, we investigate whether the improved adversarial robustness of ViTs extends to image restoration. We consider the recently proposed Restormer model, as well as NAFNet and the "Baseline network", which are both simplified versions of a Restormer. We use Projected Gradient Descent (PGD) and CosPGD for our robustness evaluation. Our experiments are performed on real-world images from the GoPro dataset for image deblurring. Our analysis indicates that, contrary to what is advocated for ViTs in image classification works, these models are highly susceptible to adversarial attacks. We attempt to find an easy fix and improve their robustness through adversarial training. While this yields a significant increase in robustness for Restormer, results on other networks are less promising. Interestingly, we find that the design choices in NAFNet and Baselines, which were based on iid performance, and not on robust generalization, seem to be at odds with the model robustness.
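As a rough illustration of how PGD probes a restoration model, the sketch below runs an L-infinity PGD loop against a toy linear map that stands in for a network. The matrix `A`, the squared-error loss, the step sizes, and the name `pgd_linf` are all assumptions for illustration. CosPGD and the actual restoration models are not reproduced here.

```python
import numpy as np

def pgd_linf(x, target, A, eps, step, n_iter):
    """Maximize ||A @ (x + delta) - target||^2 subject to ||delta||_inf <= eps."""
    delta = np.zeros_like(x)
    for _ in range(n_iter):
        residual = A @ (x + delta) - target
        grad = 2.0 * A.T @ residual            # gradient of the squared error w.r.t. the input
        delta = delta + step * np.sign(grad)   # signed ascent step
        delta = np.clip(delta, -eps, eps)      # project back onto the L-infinity ball
    return x + delta

def squared_error(x, target, A):
    """Restoration loss of the toy model on input x."""
    return float(np.sum((A @ x - target) ** 2))

# Hypothetical toy "restoration model" and data.
A = np.array([[1.0, 0.5], [0.0, 1.0]])
x = np.array([1.0, 1.0])
target = np.array([1.4, 1.1])

x_adv = pgd_linf(x, target, A, eps=0.1, step=0.025, n_iter=10)
print(squared_error(x, target, A), squared_error(x_adv, target, A))
```

The loop starts from a zero perturbation for reproducibility. Practical PGD implementations often start from a random point inside the `eps` ball instead.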

@InProceedings{Agnihotri_2023_ICCV, author = {Agnihotri, Shashank and Gandikota, Kanchana Vaishnavi and Grabinski, Julia and Chandramouli, Paramanand and Keuper, Margret}, title = {On the Unreasonable Vulnerability of Transformers for Image Restoration - and an easy fix}, booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops}, month = {October}, year = {2023}, pages = {3707-3717} }

Download here

Published in Transactions on Machine Learning Research Featured Certification, 2024

Julia Grabinski, Janis Keuper, Margret Keuper

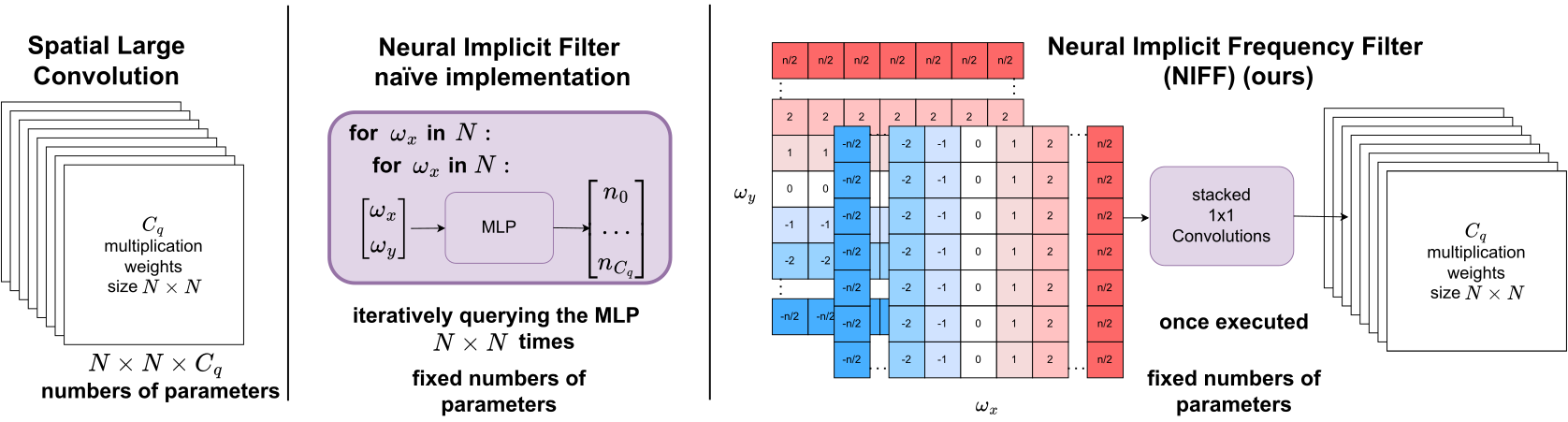

Recent work in neural networks for image classification has seen a strong tendency towards increasing the spatial context during encoding. Whether achieved through large convolution kernels or self-attention, models scale poorly with the increased spatial context, such that the improved model accuracy often comes at significant costs. In this paper, we propose a module for studying the effective filter size of convolutional neural networks (CNNs). To facilitate such a study, several challenges need to be addressed: (i) we need an effective means to train models with large filters (potentially as large as the input data) without increasing the number of learnable parameters, (ii) the employed convolution operation should be a plug-and-play module that can replace conventional convolutions in a CNN and allow for an efficient implementation in current frameworks, (iii) the study of filter sizes has to be decoupled from other aspects such as the network width or the number of learnable parameters, and (iv) the cost of the convolution operation itself has to remain manageable, i.e. we cannot naïvely increase the size of the convolution kernel. To address these challenges, we propose to learn the frequency representations of filter weights as neural implicit functions, such that the better scalability of the convolution in the frequency domain can be leveraged. Additionally, due to the implementation of the proposed neural implicit function, even large and expressive spatial filters can be parameterized by only a few learnable weights. Interestingly, our analysis shows that, although the proposed networks could learn very large convolution kernels, the learned filters are well localized and relatively small in practice when transformed from the frequency to the spatial domain. We anticipate that our analysis of individually optimized filter sizes will allow for more efficient, yet effective, models in the future.
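The scalability argument rests on the convolution theorem: a circular convolution in the spatial domain is a pointwise product in the frequency domain, so a filter as large as the input costs no more than a small one. The sketch below checks this numerically. The function names are hypothetical, and the neural implicit function that generates the frequency filter in the paper is not reproduced.

```python
import numpy as np

def circular_conv_fft(x, k):
    """Circular 2D convolution of x with a same-sized kernel k via the FFT."""
    return np.fft.ifft2(np.fft.fft2(x) * np.fft.fft2(k)).real

def circular_conv_direct(x, k):
    """Reference implementation: sum of shifted copies of x weighted by k."""
    h, w = x.shape
    out = np.zeros((h, w))
    for di in range(h):
        for dj in range(w):
            # np.roll shifts x so that out[n] accumulates k[m] * x[n - m].
            out += k[di, dj] * np.roll(x, shift=(di, dj), axis=(0, 1))
    return out

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8))
k = rng.standard_normal((8, 8))   # kernel as large as the input

print(np.allclose(circular_conv_fft(x, k), circular_conv_direct(x, k)))
```

The direct version needs one pass over the input per kernel entry, while the FFT version needs two forward transforms, one pointwise product, and one inverse transform regardless of how many kernel entries are non-zero.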

@article{ grabinski2024large, title={As large as it gets-studying infinitely large convolutions via neural implicit frequency filters}, author={Grabinski, Julia and Keuper, Janis and Keuper, Margret}, journal={Transactions on Machine Learning Research}, volume={2024}, pages={1--42}, year={2024}, publisher={TMLR}, note={Featured Certification} }

Download here

Published in European Conference on Computer Vision, 2024

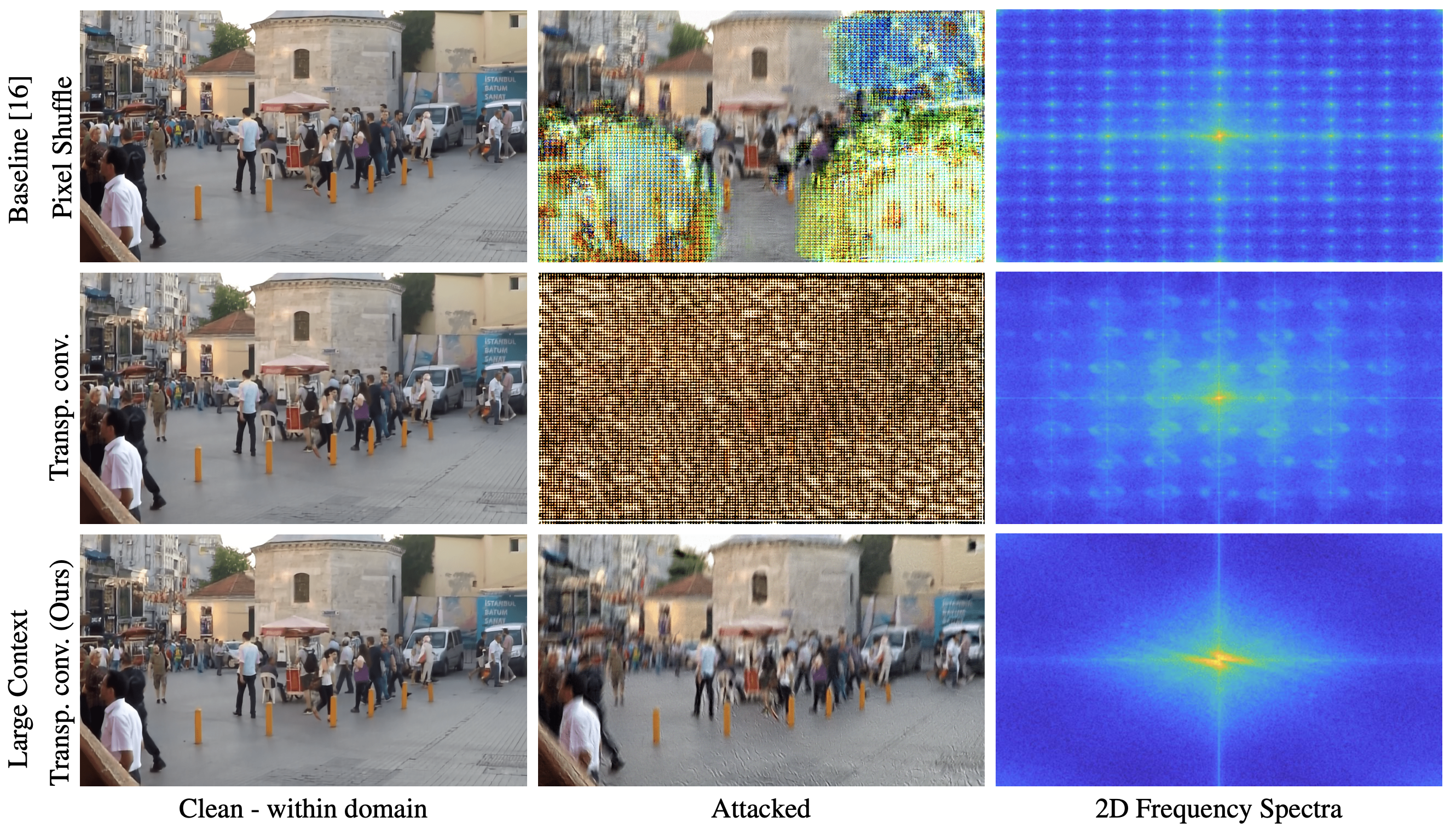

Shashank Agnihotri, Julia Grabinski, Margret Keuper

Pixel-wise predictions are required in a wide variety of tasks such as image restoration, image segmentation, or disparity estimation. Common models involve several stages of data resampling, in which the resolution of feature maps is first reduced to aggregate information and then increased to generate a high-resolution output. Previous works have shown that resampling operations are subject to artifacts such as aliasing. During downsampling, aliases have been shown to compromise the prediction stability of image classifiers. During upsampling, they have been leveraged to detect generated content. Yet, the effect of aliases during upsampling has not yet been discussed w.r.t. the stability and robustness of pixel-wise predictions. While falling under the same term (aliasing), the challenges for correct upsampling in neural networks differ significantly from those during downsampling: when downsampling, some high frequencies cannot be correctly represented and have to be removed to avoid aliases. However, when upsampling for pixel-wise predictions, we actually require the model to restore such high frequencies that cannot be encoded in lower resolutions. The application of findings from signal processing is therefore a necessary but not a sufficient condition to achieve the desirable output. In contrast, we find that the availability of large spatial context during upsampling makes it possible to provide stable, high-quality pixel-wise predictions, even when fully learning all filter weights.
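A small 1D example can show where spectral artifacts in upsampling come from. Inserting zeros between samples, the first step of many upsampling layers, copies the original spectrum into the newly available high frequencies. The signal and the helper name `zero_insert_upsample` below are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def zero_insert_upsample(x):
    """Double the length of a 1D signal by inserting a zero after every sample."""
    y = np.zeros(2 * len(x))
    y[::2] = x
    return y

n = np.arange(8)
x = np.cos(2 * np.pi * n / 8)     # a single low frequency
y = zero_insert_upsample(x)

# Indices of the non-zero frequency bins before and after upsampling.
bins_before = np.flatnonzero(np.abs(np.fft.fft(x)) > 1e-9)
bins_after = np.flatnonzero(np.abs(np.fft.fft(y)) > 1e-9)
print(bins_before, bins_after)
```

The upsampled signal contains the original frequency plus a replica near the new Nyquist frequency. The filter applied after zero insertion decides how much of that replica survives, which is where the spatial extent of the learned upsampling filter matters.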

@inproceedings{ agnihotri2024improving, title={Improving feature stability during upsampling--spectral artifacts and the importance of spatial context}, author={Agnihotri, Shashank and Grabinski, Julia and Keuper, Margret}, booktitle={European Conference on Computer Vision}, pages={357--376}, year={2024}, organization={Springer} }

Download here
